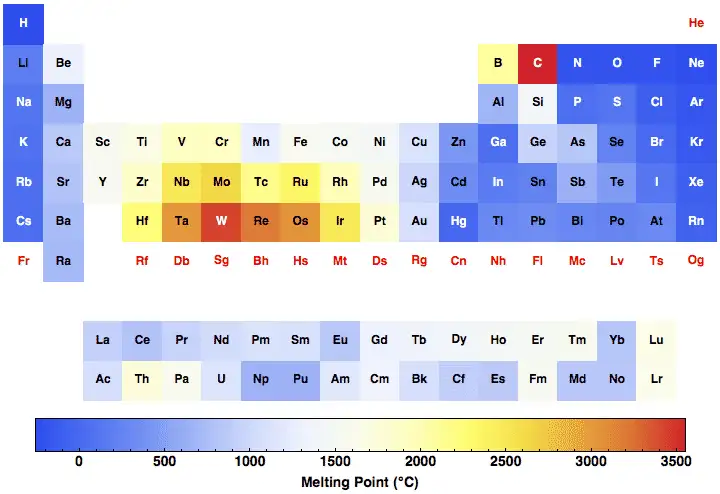

Pure iron (Fe) has a normal melting point of 1538 °C (1811 K / 2800 °F) at one atmosphere; this is the temperature where δ-ferrite (high-temperature body-centered cubic iron) becomes liquid. This value is the accepted reference used in metallurgical handbooks and thermochemical databases.

What the melting point means for a metal

When metallurgists say "melting point" for a pure metal they normally mean the equilibrium temperature at which the crystalline solid and liquid coexist at 1 atmosphere — strictly the normal melting point (or normal freezing point). For engineering alloys — including steels and cast irons — there is usually a melting range between a solidus (first liquid appears on heating) and a liquidus (last solid dissolves into liquid). Treat numerical melting numbers for metals as reference values that apply to very pure, equilibrium conditions; real-world materials typically behave differently.

Pure iron: numerical values and authoritative datasets

The commonly accepted normal melting point of elemental iron is 1538 °C (1811 K / 2800 °F) at standard atmospheric pressure. This value is reported by national and international data sources used by engineers and scientists (NIST, CRC, ASM, PDG/LBL, major chemical handbooks). The normal boiling point for iron at 1 atm is about 2860–2862 °C (≈3134 K); different references round slightly differently depending on measurement and evaluation method.

Load-bearing numeric facts:

- Normal melting point (pure Fe): 1538 °C (1811 K / 2800 °F).

- Typical literature spread: ≈1535–1539 °C depending on experimental method and purity.

- Latent heat (fusion) near the melting point: ≈247 kJ/kg (reported recommended average).
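The three unit forms quoted for the melting point can be cross-checked with a short conversion. This is only an illustrative sketch; the function names are hypothetical, and the only input taken from the text is the 1538 °C reference value.

```python
def celsius_to_kelvin(t_c: float) -> float:
    """Convert a temperature from degrees Celsius to kelvin."""
    return t_c + 273.15


def celsius_to_fahrenheit(t_c: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return t_c * 9.0 / 5.0 + 32.0


FE_MELTING_POINT_C = 1538.0  # reference value for pure Fe quoted in the text

print(round(celsius_to_kelvin(FE_MELTING_POINT_C)))      # 1811
print(round(celsius_to_fahrenheit(FE_MELTING_POINT_C)))  # 2800
```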

Iron allotropes and phase transitions that lead into melting

Iron exhibits multiple crystalline forms (allotropes) with temperature:

| Allotrope | Symbol | Crystal structure | Stable temperature range (approx., 1 atm) |
|---|---|---|---|
| Alpha iron | α-Fe | BCC (ferrite) | up to 912 °C |
| Gamma iron | γ-Fe | FCC (austenite) | 912 °C → 1394 °C |
| Delta iron | δ-Fe | BCC (high-T ferrite) | 1394 °C → 1538 °C (melting) |
| Liquid | L | n/a | > 1538 °C (for pure Fe) |

The solid → solid transitions (α↔γ around 912 °C and γ↔δ around 1394 °C) are critical because the δ phase is the one that melts at the 1538 °C reference. These transition temperatures are well documented in phase diagrams and handbooks.
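The allotrope table can be expressed as a simple lookup. The sketch below assumes pure iron at 1 atm under equilibrium conditions and uses only the approximate boundaries listed above; the function and constant names are hypothetical.

```python
# Upper temperature bound (°C) of each solid allotrope, from the table above.
ALLOTROPE_UPPER_BOUNDS_C = [
    (912.0, "alpha-Fe (BCC ferrite)"),
    (1394.0, "gamma-Fe (FCC austenite)"),
    (1538.0, "delta-Fe (BCC high-T ferrite)"),
]


def stable_phase(t_c: float) -> str:
    """Return the equilibrium phase of pure Fe at 1 atm for a temperature in °C.

    Exactly at a boundary two phases coexist; this sketch simply reports
    the higher-temperature phase in that case.
    """
    for upper_bound_c, name in ALLOTROPE_UPPER_BOUNDS_C:
        if t_c < upper_bound_c:
            return name
    return "liquid"


for temperature_c in (25.0, 1000.0, 1450.0, 1600.0):
    print(temperature_c, "->", stable_phase(temperature_c))
```

The lookup does not apply to steels or cast irons, whose transformation temperatures shift with composition.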

Why the single number (1538 °C) is useful — and when it isn't

That single number is useful for comparison, for basic calculations (thermodynamics, furnace set-points), and in teaching. However, using 1538 °C uncritically can mislead:

- Alloys: Adding carbon, silicon, nickel, chromium or other elements shifts solidus/liquidus widely.

- Impurities: Sulfur, phosphorus, oxygen and slag phases produce eutectic points below the pure-Fe melting temperature.

- Non-equilibrium heating: Rapid heating, superheating, or undercooling cause transient behavior that departs from equilibrium melting.

- Pressure: High pressure shifts melting temperature (relevant for geophysics and high-pressure experiments).

Therefore, engineers working with steel, cast iron, or specialized alloys should consult phase diagrams and manufacturer data for solidus/liquidus values rather than the single pure-Fe number.

How carbon affects iron’s melting temperature

Carbon lowers the melting point of iron when it forms solutions or carbides. The iron–carbon system (Fe–C) is the basis of steel metallurgy; some practical ranges follow:

| Material | Typical carbon (wt%) | Typical solidus/liquidus / melting behavior (approx.) |
|---|---|---|
| Pure iron | 0.00 | 1538 °C (single value) |
| Low-carbon steel | 0.05–0.25 | Solidus ≈ 1450–1500 °C, Liquidus ≈ 1500–1540 °C (varies) |
| Medium-carbon steel | 0.25–0.60 | Solidus ≈ 1420–1490 °C, Liquidus ≈ 1480–1530 °C |
| High-carbon steel / tool steels | 0.6–2.0 | Solidus can drop further; eutectic features if other alloying present |
| Cast iron (white/gray) | 2.0–4.0 | Complex melting with eutectics; liquidus often 1150–1250 °C (graphite, carbide dependent) |

Note: the cast-iron liquidus/solidus values vary strongly with composition and foundry practice — many cast irons melt at substantially lower furnace temperatures than pure iron because of eutectics and graphite formation. Fractory and Fe–C phase diagrams provide detailed boundaries.
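For quick screening, the approximate ranges in the table above can be wrapped in a lookup keyed on carbon content. This is a minimal sketch, not a substitute for a phase diagram: the function name and data layout are hypothetical, the numbers are copied from the table, and `None` marks entries for which the table gives no figure.

```python
from typing import Optional, Tuple

Range = Optional[Tuple[float, float]]

# (family, min C wt%, max C wt%, solidus range °C, liquidus range °C)
# Values copied from the table above; None means no number is given there.
FE_C_FAMILIES = [
    ("low-carbon steel", 0.05, 0.25, (1450.0, 1500.0), (1500.0, 1540.0)),
    ("medium-carbon steel", 0.25, 0.60, (1420.0, 1490.0), (1480.0, 1530.0)),
    ("high-carbon / tool steel", 0.60, 2.0, None, None),
    ("cast iron", 2.0, 4.0, None, (1150.0, 1250.0)),
]


def approx_melting_behavior(carbon_wt_pct: float) -> Optional[Tuple[str, Range, Range]]:
    """Return (family, solidus range, liquidus range) for a carbon content.

    Shared boundary values (e.g. 0.25 wt%) are assigned to the first
    matching family. Returns None when the table does not cover the value.
    """
    if carbon_wt_pct == 0.0:
        return ("pure iron", (1538.0, 1538.0), (1538.0, 1538.0))
    for family, c_min, c_max, solidus, liquidus in FE_C_FAMILIES:
        if c_min <= carbon_wt_pct <= c_max:
            return (family, solidus, liquidus)
    return None


print(approx_melting_behavior(0.10))
print(approx_melting_behavior(3.0))
```

Other alloying elements are ignored here, so the result is only a starting point before consulting grade-specific data.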

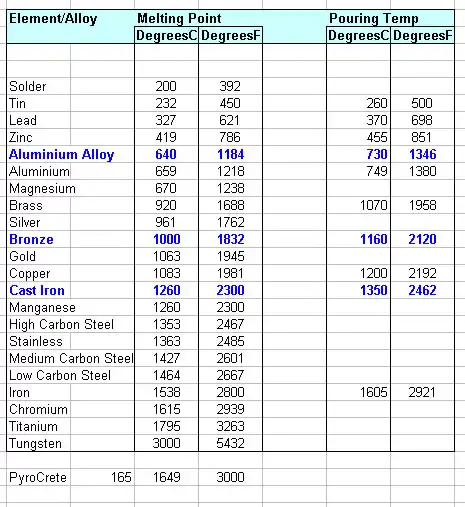

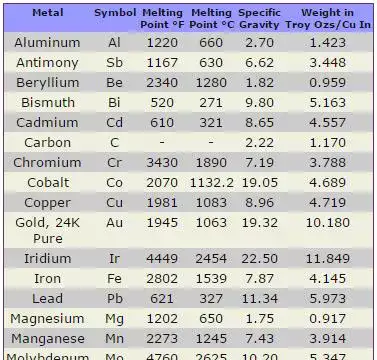

Quick comparison: melting points of common metals (reference table)

| Element / material | Melting point (°C) | Melting point (°F) |
|---|---|---|
| Iron (Fe, pure) | 1538 | 2800 |
| Copper (Cu) | 1085 | 1984 |
| Aluminum (Al) | 660.3 | 1221 |
| Nickel (Ni) | 1455 | 2651 |
| Chromium (Cr) | 1907 | 3465 |
| Titanium (Ti) | 1668 | 3034 |

(Data from standard handbooks used in engineering.)

Measurement methods and practical considerations

Measuring a metal’s melting temperature requires careful technique. Common methods include:

- Differential Scanning Calorimetry (DSC) / Differential Thermal Analysis (DTA): Measures heat flow and detects endothermic melting peaks; excellent for small samples and alloys.

- High-temperature optical pyrometry with crucible method: For refractory metals, melting can be detected visually or by emissivity-corrected pyrometry.

- Electrostatic levitation + laser heating: Used in specialized research to avoid contamination and crucible reactions; useful for superheated liquids and undercooled freezing studies.

- Static/analytical methods: Drop or immersion techniques historically used in metallurgy labs.

Each method has uncertainty sources: sample purity, crucible-sample interactions, atmosphere (oxygen can oxidize and change apparent melting behavior), heating rate, and calibration. For rigorous data, consult primary standard laboratories (NIST, national metrology institutes).

Thermophysical properties around melting

Important thermophysical properties that matter near the melting point (values approximate for pure Fe):

- Latent heat of fusion: ≈ 247 kJ/kg (energy required to melt 1 kg at the melting point).

- Density change: Like most metals, iron is less dense as a liquid than as a solid. Liquid iron near the melting point has a density of roughly 7.0 g/cm³; the exact change on melting depends on purity and on the solid phase used for comparison.

- Heat capacity: Cp rises with temperature; accurate Cp vs T is required for furnace energy estimates (NIST and handbooks provide fitted Shomate or polynomial coefficients).

Engineers use these properties to size furnaces, compute energy consumption for smelting, and model solidification in casting simulations.
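A first-pass energy budget for melting adds the sensible heat needed to reach the melting point to the latent heat of fusion. The sketch below uses the 247 kJ/kg and 1538 °C values from the text; the mean specific heat of 0.70 kJ/(kg·K) is an assumed placeholder, not a value from this article, and should be replaced by an integral of tabulated Cp(T) data for real estimates. Furnace losses and superheat are ignored.

```python
LATENT_HEAT_FUSION_KJ_PER_KG = 247.0  # from the text
MELTING_POINT_C = 1538.0              # pure Fe, 1 atm, from the text
ASSUMED_MEAN_CP_KJ_PER_KG_K = 0.70    # ASSUMPTION: placeholder average Cp


def energy_to_melt_kj(
    mass_kg: float,
    start_c: float = 25.0,
    mean_cp_kj_per_kg_k: float = ASSUMED_MEAN_CP_KJ_PER_KG_K,
) -> float:
    """Ideal energy (kJ) to heat pure Fe from start_c to melting and melt it."""
    if mass_kg < 0:
        raise ValueError("mass_kg must be non-negative")
    if start_c > MELTING_POINT_C:
        raise ValueError("start_c is already above the melting point")
    sensible_kj = mass_kg * mean_cp_kj_per_kg_k * (MELTING_POINT_C - start_c)
    latent_kj = mass_kg * LATENT_HEAT_FUSION_KJ_PER_KG
    return sensible_kj + latent_kj


energy_kj = energy_to_melt_kj(1000.0)  # one tonne, starting at 25 °C
print(f"{energy_kj:.0f} kJ = {energy_kj / 3600.0:.1f} kWh")
```

Real furnaces need noticeably more energy than this ideal figure because of heat losses and the superheat applied above the liquidus.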

Industrial implications — smelting, casting, welding and heat treatment

Practical impacts of iron’s melting behavior include:

- Foundry practice: Foundries set furnace and ladle temperatures to exceed the liquidus for the specific alloy; for many steels this means maintaining ~1550–1600 °C in an electric arc or induction furnace for proper superheat and ladle transfer. Cast iron melts at lower temperatures in many cases.

- Welding: Local melting in welding requires control to avoid excessive dilution, burn-through or embrittlement from alloying elements.

- Heat treatment: Heat treatments stay well below melting. The critical transformation temperatures (e.g., A1, A3) that determine microstructure lie far below the solidus, so precise phase diagrams and TTT/CCT data are used rather than melting data.

- Additive manufacturing (metal AM): Melting and solidification rates strongly affect microstructure, so print parameters are tuned relative to melting characteristics.

Good practice: always work from the material’s documented solidus/liquidus and the foundry or mill’s recommended furnace temperatures, not only the pure-Fe melting number.
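The superheat logic described for foundry practice reduces to simple arithmetic: the furnace set-point is the alloy's liquidus plus a chosen superheat margin. The sketch below illustrates this; the function names are hypothetical, and the example liquidus (1520 °C) and superheat (50 K) are made-up inputs, not recommendations for any grade.

```python
def furnace_setpoint_c(liquidus_c: float, superheat_k: float) -> float:
    """Return a furnace set-point as the liquidus plus a superheat margin."""
    if superheat_k < 0:
        raise ValueError("superheat_k must be non-negative")
    return liquidus_c + superheat_k


def has_enough_superheat(melt_temp_c: float, liquidus_c: float, min_superheat_k: float) -> bool:
    """Check whether a measured melt temperature meets the required margin."""
    return melt_temp_c - liquidus_c >= min_superheat_k


# Hypothetical inputs: take the real liquidus from the grade's datasheet.
example_liquidus_c = 1520.0
example_superheat_k = 50.0

print(furnace_setpoint_c(example_liquidus_c, example_superheat_k))  # 1570.0
print(has_enough_superheat(1545.0, example_liquidus_c, example_superheat_k))  # False
```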

Pressure and extreme conditions

At ambient pressures the 1538 °C reference holds. Under high pressure, melting behavior shifts (important in geophysics and high-pressure materials research). Experimental and computed phase diagrams at GPa pressures show melting temperature can increase with pressure for Fe; such shifts are outside normal industrial relevance but crucial for earth-core modeling and high-pressure devices. Specialized publications and NIST/PDG summaries document pressure-dependent melting lines.
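As a rough guide to the direction of the pressure effect, the initial slope of a melting line follows from the Clausius–Clapeyron relation. The form below is the standard thermodynamic relation, not something taken from the sources cited here. The symbols are introduced for illustration: $T_m$ is the melting temperature in kelvin, $L_f$ the latent heat of fusion per unit mass, and $\Delta v$ the change in specific volume on melting.

$$
\frac{dT_m}{dP} = \frac{T_m \, \Delta v}{L_f},
\qquad
\Delta v = \frac{1}{\rho_{\text{liquid}}} - \frac{1}{\rho_{\text{solid}}}
$$

Because liquid iron is less dense than the solid at the melting point, $\Delta v > 0$ and the slope is positive, which agrees with the statement that the melting temperature rises with pressure. The relation gives only the local slope near a known point on the melting line; over GPa-scale ranges, measured or computed melting curves are needed.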

Common mistakes and how to avoid them

- Using pure-Fe number for alloys: leads to wrong furnace settings. Always use material-specific data.

- Confusing melting point with solidus/liquidus: for alloys these are ranges, not single values.

- Ignoring atmosphere and contamination: oxidation or crucible reactions alter melting behavior. Use inert gases or vacuum if needed.

- Relying on non-calibrated thermocouples or pyrometers: calibration traceable to standards is essential for accurate temperatures.

Practical tables for engineers

Representative solidus/liquidus ranges (engineering approx.)

| Material family | Example composition | Approx. solidus (°C) | Approx. liquidus (°C) | Notes |
|---|---|---|---|---|
| Pure iron | 0% C | 1538 (single value) | 1538 | Reference pure Fe |
| Low-carbon steel | 0.05–0.25% C | 1450–1500 | 1500–1540 | Depends on alloying |
| Stainless steel (austenitic) | Fe-18Cr-8Ni | ~1390–1450 | ~1450–1520 | Cr and Ni shift the melting range relative to pure Fe |
| Gray cast iron | 2–4% C (graphite) | ~1150 | ~1200–1250 | Eutectic features with graphite |
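Given a solidus and liquidus for a material, its equilibrium state at a given temperature can be classified as solid, partially molten, or fully liquid. The sketch below assumes equilibrium conditions and user-supplied phase boundaries; the function name is hypothetical, and the example uses the approximate gray cast iron values from the table (~1150 °C solidus, ~1250 °C as the upper liquidus estimate).

```python
def melt_state(t_c: float, solidus_c: float, liquidus_c: float) -> str:
    """Classify the equilibrium state of an alloy at temperature t_c (°C)."""
    if solidus_c > liquidus_c:
        raise ValueError("solidus_c must not exceed liquidus_c")
    if t_c < solidus_c:
        return "solid"
    if t_c > liquidus_c:
        return "liquid"
    # Between solidus and liquidus (inclusive) solid and liquid coexist.
    return "partially molten"


# Approximate gray cast iron boundaries taken from the table above.
for temperature_c in (1100.0, 1200.0, 1300.0):
    print(temperature_c, "->", melt_state(temperature_c, 1150.0, 1250.0))
```

For a pure metal the solidus and liquidus coincide, so the "partially molten" branch is reached only exactly at the melting point.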

FAQs

- Q: What is the melting point of iron?

  A: 1538 °C (1811 K / 2800 °F) for elemental iron at 1 atm.

- Q: Does steel melt at the same temperature as pure iron?

  A: No. Steel is an alloy and exhibits a melting range (solidus to liquidus) that depends on carbon and alloy content; many steels melt somewhat below or near the pure-Fe number.

- Q: Why do foundries use furnace temperatures above 1538 °C?

  A: Furnaces maintain superheat above the liquidus to ensure good fluidity, time for degassing, and to compensate for heat losses during transfer. Typical steel heats are often kept at ~1550–1600 °C depending on alloy and process.

- Q: How does carbon concentration influence melting?

  A: Carbon lowers melting points via eutectic reactions; cast irons (high C) typically have much lower liquidus temperatures than pure iron. See Fe–C phase diagrams.

- Q: Can impurities raise the melting point?

  A: Some elements raise and some lower the liquidus. High-melting elements such as chromium and tungsten can raise melting boundaries in certain alloys, while carbon and sulfur lower them. Exact effects depend on composition.

- Q: How accurate is 1538 °C?

  A: Different authoritative sources round slightly; experimental values may vary by a few °C depending on purity and measurement method. Typical reported spread is ~1535–1539 °C.

- Q: What is latent heat of fusion for iron?

  A: Approximately 247 kJ/kg, used for energy budgeting for melting.

- Q: Does atmospheric oxygen change the effective melting behavior?

  A: Yes. Oxidation, formation of slag and oxide layers, and crucible reactions can change the apparent melting behavior and must be controlled with fluxes, slag management, or inert atmospheres.

- Q: Are there standard references for melting points?

  A: Yes. NIST WebBook, CRC Handbook, ASM handbooks and PDG/LBL tables are widely used for reliable thermophysical data.

- Q: Where can I find solidus/liquidus for a specific industrial steel grade?

  A: Consult the steel producer’s datasheet, standard references (e.g., ASM, published Fe–C and multi-component phase diagrams), or thermodynamic databases (e.g., Thermo-Calc, FactSage) for accurate phase boundary data.

Final notes for practitioners

- Use the 1538 °C figure only as a baseline for pure iron.

- For process control, always rely on material-specific solidus/liquidus, mill/foundry datasheets, and calibrated instrumentation.

- For publication or design work, cite authoritative databases (NIST/CRC/ASM) and state the conditions (pressure, atmosphere, composition) under which temperatures apply.

Authoritative references

- NIST Chemistry WebBook — Iron (Fe) thermochemical and condensed-phase data

- PubChem / NCBI — Iron (element) summary and physical data (includes CRC references)

- Particle Data Group / LBL — Fe (iron) atomic and phase data (melting/boiling temps)

- ASM International — Metals handbooks and chapters on pure metal properties (requires access)

- Fe–C phase diagram resources and explanatory material (academic/handbook summaries)